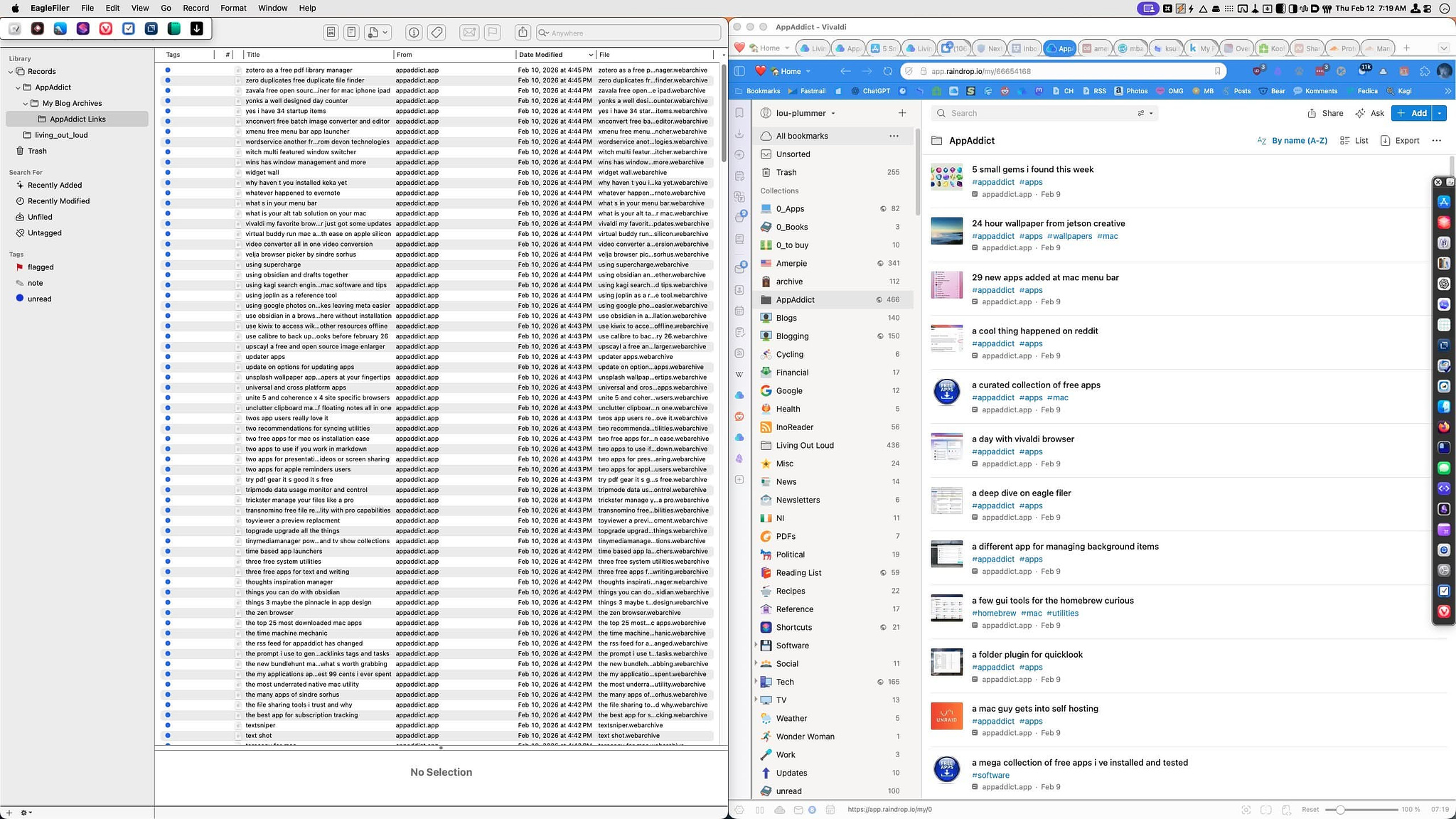

Turning AppAdddict into a Fully Searchable Archive with Integrity, Raindrop, and EagleFiler

Mac Apps

As an App Addict, I enjoy testing new tools and watching indie developers invent clever ways to get things done. But collecting apps isn't the goal. The real satisfaction comes when those tools solve an actual problem. Here's a recent workflow I built using apps I've reviewed on this blog.

The Problem

I spend a fair amount of time in r/MacApps, on Mastodon, and in email threads talking software with other nerds. I've reviewed hundreds of apps, and I'm often asked for links to older posts. Until recently, I had two options:- Run a site-specific search on Kagi

- Browse or search the archive section of my blog

The Goal

I wanted two things:- A fully searchable offline index of all 469 reviews -- including their public URLs

- An online, full-text--searchable index of the entire site without manually building one

The Tools

- Integrity -- A free crawler that can extract every URL on a domain when configured correctly

- A CSV editor like Delimited, Easy CSV, or (if you must) Excel or Google Sheets

- My Python script: CSV to Safari Bookmarks

- A Raindrop.io account -- a full-featured bookmark manager with Mac and iOS apps

- EagleFiler -- a personal knowledge base that can create local web archives from any URL

The Process

1. Crawl the Site I fed the base URL of my blog into Integrity and configured it to return only one instance of each URL. The crawl took about five minutes and produced a sortable list of every post URL on my site. No guesswork. No missed pages.2. Export to CSV I copied the list of URLs from Integrity, pasted them into Easy CSV, and saved the result as a properly formatted CSV file. At this point, I had a clean dataset: one row per post, one URL per row.

3. Convert to Safari Bookmarks I opened Ghostty and ran my Python script: CSV to Safari Bookmarks The script converts the CSV into a Safari-compatible bookmarks file. Simple transformation, clean output.

4. Import into Raindrop.io In Raindrop, I chose Import Bookmarks -- not "Import File." That distinction matters. The bookmarks import preserves structure correctly. Raindrop then pulled in every post.

5. Import into EagleFiler In EagleFiler, I selected: File → Import Bookmarks EagleFiler fetched each URL and created a local web archive for every post. No manual downloading. No copy/paste gymnastics.

The Result

Raindrop.io Raindrop created a collection containing every post on my site. Because it performs full-text indexing, searches aren't limited to titles. I can search for an obscure phrase buried deep in an article and still surface the right post. It also stores a permanent copy of each page. If my hosting provider disappears tomorrow, I still have an offsite archive. EagleFiler EagleFiler downloaded and archived every URL as a standalone web archive file. A web archive is a single file containing the full page -- text, images, links, styling. It's searchable, portable, and completely offline. Now I have:- Full-text search online (Raindrop)

- Full-text search offline (EagleFiler)

- Public URLs attached to every entry

- Redundant archival copies

Why Not Just…

…Use My CMS Search? CMS search works until it doesn't. It requires being online, depends on whatever indexing logic your platform uses, and doesn't give you a portable dataset you control. I wanted something I could manipulate, migrate, or repurpose independently of my hosting stack.…Search the Markdown Files Directly? I can -- and I do. But Markdown files don't include the canonical public URL. When someone asks for a link, I need that immediately. This workflow preserves the published URLs alongside searchable content.

…Export the Database? That's fine if you're running WordPress. I'm not. And even if I were, a database dump is not a clean, portable, human-friendly index. It's raw tables. I wanted something that integrates with tools I already use daily.

…Use a Browser Bookmark Export? That only captures what I've manually bookmarked. I wanted a complete, authoritative list of everything published -- no gaps and no reliance on memory. Integrity gives me the ground truth.

…Install a Static Site Search Tool? Client-side search libraries are great for readers. This wasn't about improving the reader experience. It was about fixing my own workflow across online search, offline access, and long-term archiving.

This system gives me:

- Full-text search online via Raindrop.io

- Full-text search offline via EagleFiler

- Portable structured data via CSV to Safari Bookmarks

- A reproducible crawl using Integrity

.svg.png)